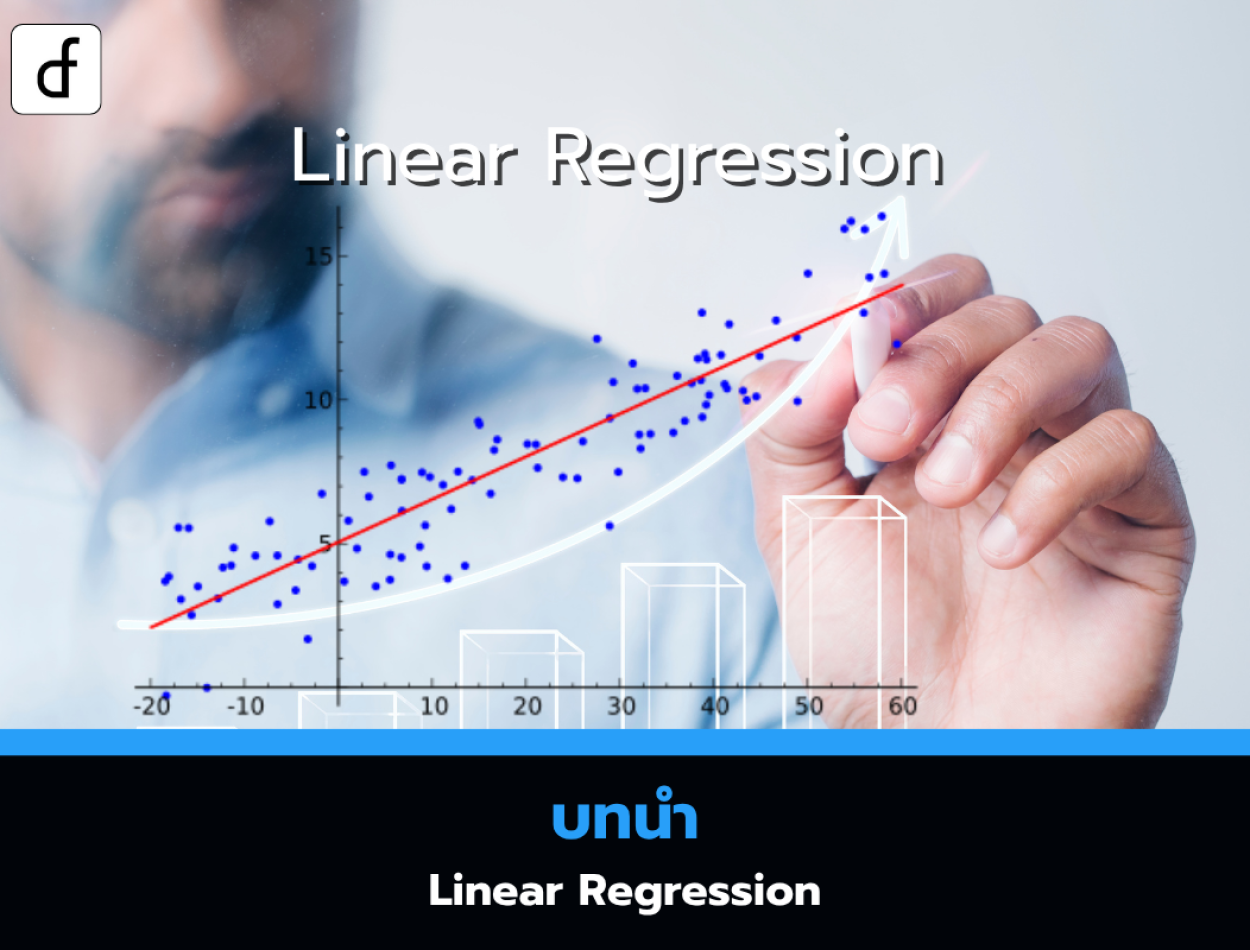

Linear Regression: An Introduction

2025-05-27 09:35:22

Linear Regression is one of the most fundamental methods utilised within data science, with applications in both prediction and inference. Many practising data scientists have a strong grounding in statistics, and linear regression will be extremely familiar to this group. However, others are self-taught, have been trained at an intensive code-focused bootcamp, or have a background in computer science rather than mathematics or statistics.

For this latter group, linear regression may not have been considered in depth. It may have been taught in a manner that emphasises prediction, without delving into the specifics of estimation, inference or even whether the technique is appropriate for a particular dataset.

This article series is designed to 'fill the gap' for those who do not have formal training in statistical methods. It will discuss linear regression from the 'ground up', outlining when it should be used, how the model is fitted to data, how to assess the goodness of such a fit, and how to diagnose problems that may lead to bias within the results.

Such theoretical insights are not simply 'nice to haves' for practising data scientists. Many interview questions at some of the top data-driven employers will test advanced knowledge of the technique in order to differentiate between those data scientists who may have briefly dabbled in Scikit-Learn and those who have extensive experience in statistical data analysis.

Having a solid grounding in linear regression will also provide greater intuition as to when it is appropriate to apply a particular model to a dataset. This will ultimately lead to more robust analyses and better outcomes for your data science objectives.

In this overview article we will briefly discuss the mathematical model of linear regression. We will then provide a roadmap for the subsequent articles in the series, each of which goes into more depth on a particular aspect. We will also describe the software we will be using to strengthen our knowledge of the technique.

What is Linear Regression?

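Mathematically, the linear regression model states that a particular continuous response value $y$ is a linear function of its features $x$ plus some normally distributed error $\epsilon$:

$$y(x) = \beta^T x + \epsilon = \sum_{j=0}^{p} \beta_j x_j + \epsilon$$

Where the parameters are given by $\beta = (\beta_0, \beta_1, \ldots, \beta_p)^T$ and the features by $x = (1, x_1, \ldots, x_p)^T$. The error $\epsilon \sim \mathcal{N}(0, \sigma^2)$ is normally distributed with mean zero and variance $\sigma^2$.

Note that $\beta$ and $x$ are both $(p + 1)$-dimensional. This is due to the fact that we need to include $p$ parameters plus an intercept term in the model.

Including the '1' in $x$ is a notational trick that absorbs the intercept $\beta_0$ into the inner product $\beta^T x$, so the model can be written compactly in vector form. This extends naturally to the case of $n$ separate samples:

$$y = X\beta + \epsilon$$

Where $y \in \mathbb{R}^n$, $X \in \mathbb{R}^{n \times (p + 1)}$, $\beta \in \mathbb{R}^{p + 1}$ and $\epsilon \in \mathbb{R}^n$, with $\epsilon \sim \mathcal{N}(0, \sigma^2 I)$.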

Informally, this states that the vector of response values is equal to the matrix of features (n rows, one per sample, with p + 1 features per row) multiplied by the parameter vector, plus a vector of normally distributed errors.

The linear regression model thus attempts to explain the n-dimensional response vector with a much simpler p + 1-dimensional linear model, leaving n - (p + 1)-dimensional random variation in the residuals of the model.
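To make the notation concrete, here is a minimal NumPy sketch that simulates data from the model $y = X\beta + \epsilon$. The sample size, the 'true' parameter values and the noise level are hypothetical choices made purely for illustration; in practice $\beta$ is unknown and has to be estimated from the data.

```python
import numpy as np

# Hypothetical sizes and "true" parameters, chosen purely for illustration
n, p = 100, 3
beta = np.array([1.0, 2.0, -0.5, 0.25])  # (beta_0, beta_1, ..., beta_p), length p + 1
sigma = 0.5

rng = np.random.default_rng(42)

# Raw features: n rows (one per sample), p columns
features = rng.normal(size=(n, p))

# Prepend the column of ones so the intercept beta_0 is handled by X @ beta
X = np.column_stack([np.ones(n), features])  # shape (n, p + 1)

# Independent normal errors with mean zero and variance sigma^2
eps = rng.normal(loc=0.0, scale=sigma, size=n)

# The model y = X beta + epsilon
y = X @ beta + eps

print(X.shape)      # (100, 4)
print(y.shape)      # (100,)
print(n - (p + 1))  # 96 dimensions left over for residual variation
```

The column of ones in `X` plays exactly the role of the '1' in $x$, so the intercept is handled by the same matrix product as every other parameter.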

Essentially, the model is trying to capture as much of the structure of the data as possible in p dimensions, where $p \ll n$; that is, the number of features is much smaller than the number of samples.

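The task of linear regression is to try and find an appropriate estimate of the parameter vector $\beta$, usually denoted $\hat{\beta}$. One of the most common approaches is Ordinary Least Squares (OLS), which chooses $\hat{\beta}$ to minimise the residual sum of squares $\lVert y - X\beta \rVert^2$.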

The roadmap below will describe OLS in detail along with some alternative fitting procedures. It will also include some of the issues that can arise when trying to apply linear regression to real world datasets.
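As a brief preview of the estimation step, the sketch below generates synthetic data (the parameters and noise level are assumed, hypothetical values) and computes the OLS estimate $\hat{\beta}$ with NumPy's least-squares solver `np.linalg.lstsq`. It is only a minimal illustration of the idea, not a substitute for the more careful treatment in the articles outlined below.

```python
import numpy as np

# Hypothetical simulated data: an intercept plus two features
rng = np.random.default_rng(0)
n, p = 200, 2
beta = np.array([0.5, 1.5, -2.0])  # assumed "true" parameters, intercept first
X = np.column_stack([np.ones(n), rng.normal(size=(n, p))])
y = X @ beta + rng.normal(scale=0.3, size=n)

# OLS picks beta_hat to minimise the residual sum of squares ||y - X beta||^2;
# np.linalg.lstsq solves exactly this least-squares problem.
beta_hat, rss, rank, _ = np.linalg.lstsq(X, y, rcond=None)

residuals = y - X @ beta_hat
print("beta_hat:", beta_hat)
print("RSS:", residuals @ residuals)
```

With an intercept in the model, the OLS residuals sum to (numerically) zero, which is a quick sanity check on any fit.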

Linear Regression Roadmap

Now that we have introduced linear regression we are going to outline how we will proceed in subsequent articles:

- Exploratory Data Analysis - We will begin by examining some datasets using exploratory data analysis (EDA). This is an essential first step in the data science workflow prior to the application of any model. This article will also serve to introduce us to the datasets we will be utilising in the subsequent articles.

- Ordinary Least Squares and the so-called Normal Equations - We will introduce one of the main methods for fitting a linear regression—the technique of Ordinary Least Squares. We will also derive the Normal Equations used in the estimation procedure. We will briefly discuss when OLS should and should not be applied.

- Maximum Likelihood Estimation - We will discuss the statistical method of Maximum Likelihood Estimation (MLE) and show that it leads to the same estimates as OLS under certain conditions.

- Stochastic Gradient Descent - The computational optimisation point of view for fitting a linear model often uses the Stochastic Gradient Descent (SGD) algorithm, which is widely utilised in machine learning (ML). We will show that under certain conditions the SGD estimate matches that of both OLS and MLE. Since SGD is used for many more complex ML models, it is appropriate to see how it works in the simpler setting of linear regression.

- Gauss-Markov Theorem - We describe the Gauss-Markov theorem and its implications for deciding when OLS is an appropriate technique for a particular dataset.

- Goodness of Fit and R-Squared - Once we have fitted a model we would like to determine if the fit is, in some sense, 'good' and how to quantify the 'goodness of fit' with further statistical methods. We will introduce the coefficient of determination ("R-Squared") as one approach for this.

- Identifiability - Real-world data often contains many correlated variables. In certain situations this can render the OLS technique inadmissible. We will describe how to minimise such 'identifiability' issues and how to further refine a dataset so that a model can be fitted.

- Generalised Least Squares - If the assumption of no correlation in the residuals of the OLS fit does not hold we need to utilise an alternative fitting procedure, known as Generalised Least Squares (GLS).

- Weighted Least Squares - If the assumption of constant error variance in the model is not met—the data are heteroskedastic—then we need to utilise an alternative fitting procedure, namely Weighted Least Squares (WLS).
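Several of the roadmap items make claims that can be checked numerically. The sketch below, on hypothetical simulated data, solves the Normal Equations $(X^T X)\hat{\beta} = X^T y$, runs plain full-batch gradient descent on the mean squared error (a deterministic simplification swapped in for the stochastic variant discussed above, because it converges to the exact solution on this small problem), and computes the coefficient of determination $R^2 = 1 - \text{RSS}/\text{TSS}$. The learning rate and iteration count are assumed values that suit this well-conditioned example; they are not general recommendations.

```python
import numpy as np

# Hypothetical simulated data: intercept plus two standard-normal features
rng = np.random.default_rng(1)
n, p = 500, 2
beta = np.array([1.0, -1.0, 3.0])  # assumed "true" parameters
X = np.column_stack([np.ones(n), rng.normal(size=(n, p))])
y = X @ beta + rng.normal(scale=1.0, size=n)

# 1. Normal Equations: (X^T X) beta_hat = X^T y.
#    np.linalg.solve avoids forming the explicit matrix inverse.
beta_ne = np.linalg.solve(X.T @ X, X.T @ y)

# 2. Full-batch gradient descent on the mean squared error (1/n)||X b - y||^2,
#    whose gradient is (2/n) X^T (X b - y).
beta_gd = np.zeros(p + 1)
learning_rate = 0.1  # assumed step size, small enough to be stable here
for _ in range(5000):
    grad = (2.0 / n) * X.T @ (X @ beta_gd - y)
    beta_gd -= learning_rate * grad

# 3. Coefficient of determination: R^2 = 1 - RSS / TSS
rss = np.sum((y - X @ beta_ne) ** 2)
tss = np.sum((y - y.mean()) ** 2)
r_squared = 1.0 - rss / tss

print("Normal Equations:", beta_ne)
print("Gradient descent:", beta_gd)
print("R-squared:", r_squared)
```

Solving the linear system rather than inverting $X^T X$ is the usual numerical choice; when features are highly correlated (the identifiability issue above), $X^T X$ becomes ill-conditioned and both approaches degrade.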

As more articles are published on QuantStart, they will be added to this roadmap.

Software for Linear Regression

In this series of articles we will make use of the Python programming language and its range of popular freely-available open source data science libraries. We will assume that you have a working Python research environment set up. The most common—and straightforward—approach is to install the freely-available Anaconda distribution.

We will be making use of the following Python libraries:

- NumPy - NumPy is Python's underlying numerical storage library, used extensively by most of the following libraries.

- Pandas - Pandas will be used to load and manipulate the dataset examples used within these articles.

- Statsmodels - Statsmodels is Python's primary library for classical statistical modelling, inference and time series analysis.

- Scikit-Learn - Scikit-Learn is a comprehensive machine learning library that emphasises prediction over inference.

- Matplotlib - Matplotlib is Python's underlying plotting library and will be used as the basis for all visualisation.

- Seaborn - Seaborn will be utilised for various statistically oriented plots, such as pairwise scatterplots and kernel density estimates.

- Yellowbrick - Yellowbrick is a visualisation library for statistical machine learning diagnostics.

Scikit-Learn is often the 'go-to' machine learning library, with its own implementation of ordinary least squares regression. However, we wish to emphasise the theoretical properties and statistical inference of linear regression and hence will mainly be utilising the implementation found in Statsmodels. Those who are familiar with fitting linear models in R will likely find Statsmodels a similar environment.

Summary

We have described how the variety of data science backgrounds leads to wide variation in understanding of the theoretical basis of many statistical models.

We have motivated why learning linear regression is extremely useful, both from the point of view of interview preparation and for producing robust outcomes in data science projects.

Linear regression was briefly introduced with an accompanying learning roadmap. Finally, the software utilised in future articles was described.

Reference: Linear Regression: An Introduction

From https://www.quantstart.com/articles/linear-regression-an-introduction/
