New AI legal framework and engagement with GDPR

2024-05-02 10:07:23

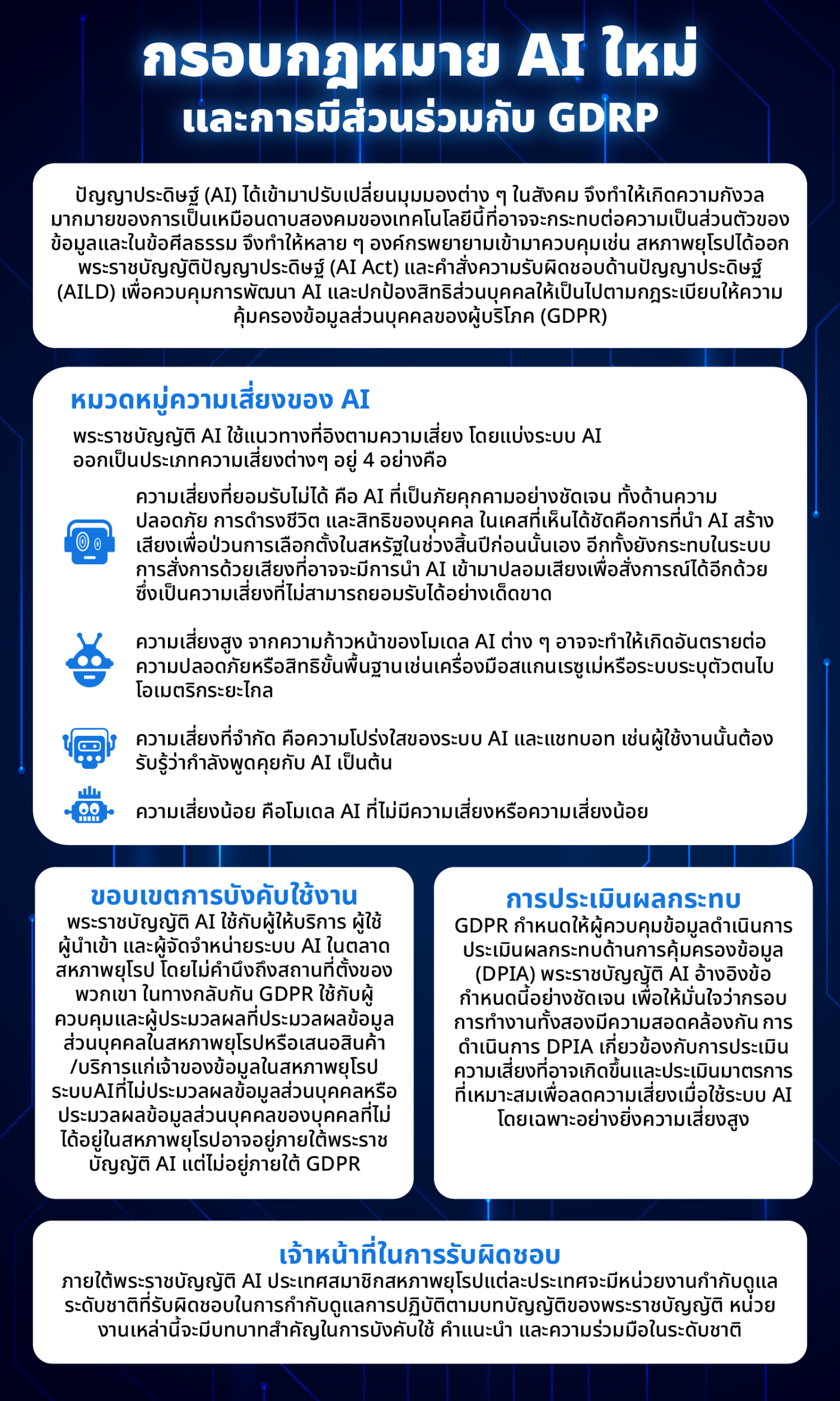

Artificial intelligence (AI) has changed many aspects of society, and its double-edged nature has raised many concerns. Because the technology can affect data privacy and ethics, many organizations are trying to control it. The European Union, for example, has introduced the Artificial Intelligence Act (AI Act) and proposed the Artificial Intelligence Liability Directive (AILD) to regulate AI development and protect individual rights in line with the General Data Protection Regulation (GDPR).

An article by Mr. Ian Borner addresses these data privacy concerns under the following headings:

AI risk categories

The AI Act takes a risk-based approach, dividing AI systems into four risk categories:

- Unacceptable risk covers AI that poses a threat to safety, livelihoods, and individual rights. An obvious case is the use of AI to create noise to disrupt the US elections at the end of the previous year. Another is voice command systems, where AI may be used to fake your voice and give commands.

- High risk covers AI systems that may endanger safety or basic rights, such as resume scanning tools or remote biometric identification systems.

- Limited risk concerns the transparency of AI systems such as chatbots. For example, users must be made aware that they are talking with an AI.

- Low risk covers AI models that pose little or no risk.
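For teams that keep an inventory of their AI systems, the four categories above can be sketched as a simple lookup. This is only an illustration: the names `RiskTier`, `EXAMPLE_TIERS`, and `tier_for` are hypothetical, the mapping contains only the examples mentioned in the list above, and a real classification requires legal analysis against the text of the AI Act.

```python
from enum import Enum
from typing import Optional


class RiskTier(Enum):
    """The four risk categories described in the list above."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    LOW = "low"


# Hypothetical lookup table, filled only with the examples named in the article.
# It is not an official or exhaustive classification.
EXAMPLE_TIERS = {
    "voice faking to give commands": RiskTier.UNACCEPTABLE,
    "resume scanning tool": RiskTier.HIGH,
    "remote biometric identification": RiskTier.HIGH,
    "chatbot": RiskTier.LIMITED,
}


def tier_for(use_case: str) -> Optional[RiskTier]:
    """Return the example tier for a use case, or None if it is not listed."""
    return EXAMPLE_TIERS.get(use_case.strip().lower())


def needs_ai_disclosure(tier: RiskTier) -> bool:
    """Limited-risk systems must tell users that they are talking with an AI."""
    return tier is RiskTier.LIMITED
```

Returning `None` for an unlisted use case is a deliberate choice in this sketch: an unknown system should be reviewed rather than silently treated as low risk.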

Scope of application

The AI Act applies to providers, users, importers, and distributors of AI systems on the EU market. GDPR, on the other hand, applies to controllers and processors that process personal data in the EU or offer goods/services to EU data subjects. AI systems that do not process personal data, or that process personal data of non-EU persons, may therefore be subject to the AI Act but not GDPR.

Impact assessment

GDPR requires data controllers to carry out a Data Protection Impact Assessment (DPIA) where processing is likely to result in a high risk to individuals' rights and freedoms. The AI Act expressly references this requirement, which helps keep the two frameworks consistent. Conducting a DPIA involves assessing potential risks and evaluating appropriate measures to mitigate them when using AI systems, especially high-risk ones.

Responsible authorities

Under the AI Act, each EU member state designates a national supervisory authority responsible for overseeing compliance with the Act's provisions. These authorities will play a key role in enforcement, guidance, and cooperation at the national level.
