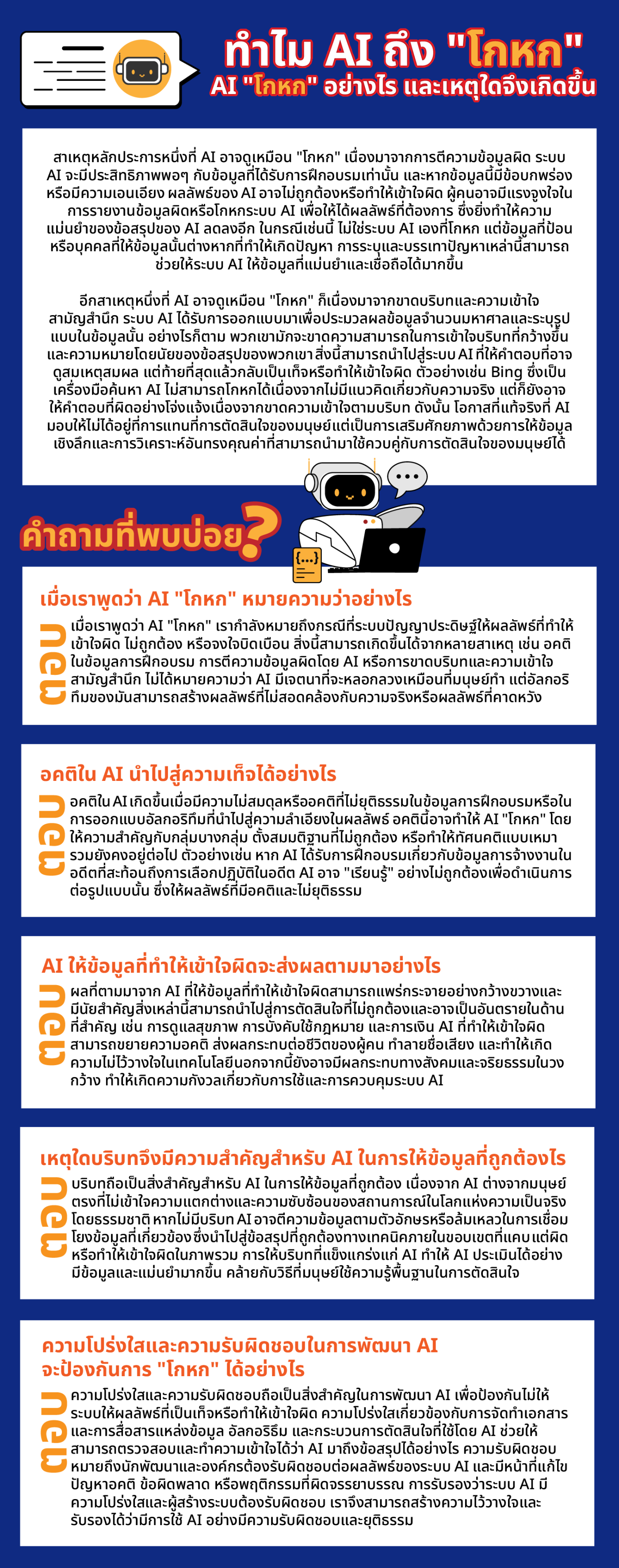

Why does AI "lie"?

2024-04-18 04:57:21

How does AI "lie" and why does it happen?

Understanding the concept of deception in AI is important for properly assessing its potential risks and benefits. In general, lying means intentionally providing false information with the intent to deceive. For an AI system to lie in this sense, it would need something like consciousness or will, which current AI technology does not have. However, AI systems may still provide incomplete or inaccurate information because of limitations in their training data or design. Although AI has the potential to help identify and reduce the impact of human bias, it is important to be aware of the pitfalls that may arise from its use.

One of the main reasons AI can appear to "lie" is misinterpretation of data. AI systems learn from their training data, so if that data is flawed or biased, their results may be inaccurate or misleading. People may also have an incentive to report misinformation, or to lie to AI systems, to get the results they want, which further reduces the accuracy of the AI's conclusions. In such cases, it is not the AI system itself that lies; the problem lies with the input or with the person providing it. Identifying and mitigating these issues can help AI systems provide more accurate and reliable information.

Another reason AI can appear to "lie" is that it lacks context and common sense. AI systems are designed to process large amounts of data and identify patterns in it, but they often cannot understand the broader context and implications of their conclusions. As a result, they may give answers that seem reasonable but turn out to be false or misleading. For example, Bing, an AI-powered search engine, cannot lie because it has no concept of truth, yet it can still give blatantly wrong answers because it lacks contextual understanding. The real opportunity AI offers, therefore, is not replacing human decision-making but supporting it with valuable insights and analysis.
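To make the "no concept of truth" point concrete, here is a minimal Python sketch of a toy word-pair (bigram) model. It is a hypothetical illustration, vastly simpler than the system behind Bing, and the two-sentence corpus is invented for the example. Every training sentence is true, yet because the model only records which word tends to follow which, it can also produce fluent sentences that are false.

```python
from collections import defaultdict

# Hypothetical toy corpus: every sentence in it is true.
CORPUS = [
    "paris is the capital of france",
    "rome is the capital of italy",
]


def train_bigrams(sentences):
    """Record which words have been seen directly after each word."""
    follows = defaultdict(set)
    for sentence in sentences:
        words = ["<s>"] + sentence.split() + ["</s>"]
        for prev, nxt in zip(words, words[1:]):
            follows[prev].add(nxt)
    return follows


def all_generations(follows, max_len=10):
    """Enumerate every sentence the bigram model can produce."""
    results = []

    def walk(word, path):
        for nxt in sorted(follows[word]):
            if nxt == "</s>":
                results.append(" ".join(path))  # reached an end-of-sentence marker
            elif len(path) < max_len:
                walk(nxt, path + [nxt])

    walk("<s>", [])
    return results


generated = all_generations(train_bigrams(CORPUS))
false_claims = [s for s in generated if s not in CORPUS]
print(false_claims)
# ['paris is the capital of italy', 'rome is the capital of france']
```

The model never saw either false sentence; it assembled them from word patterns that were each individually accurate. It has no way to check the result against the world, which is the sense in which it "cannot lie" yet can still be wrong.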

Frequently asked questions

Q. What do we mean when we say AI "lies"? A. When we say AI "lies," we are referring to cases in which artificial intelligence systems produce misleading, inaccurate, or distorted results. This can happen for many reasons, such as bias in the training data, misinterpretation of data by the AI, or a lack of context and common-sense understanding. This does not mean that AI intends to deceive as humans do; rather, its algorithms can produce results that are inconsistent with reality or with what was expected.

Q. How can bias in AI lead to false results? A. Bias in AI occurs when an imbalance or unfairness in the training data, or in the design of an algorithm, skews its results. This bias can cause AI to "lie" in favor of certain groups, make incorrect assumptions, or perpetuate stereotypes. For example, if an AI is trained on historical hiring data that reflects past discrimination, it may "learn" to continue that pattern, producing biased and unfair results.
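The hiring example can be sketched in a few lines of Python. The records, the group labels "A" and "B", and the 0.5 threshold are all hypothetical; the "model" simply learns each group's historical hire rate, which is enough to show how past discrimination carries over into new recommendations.

```python
from collections import Counter

# Hypothetical historical records: (group, was_hired).
# All candidates are assumed equally qualified, but past decisions
# hired group "B" far less often than group "A".
HISTORY = (
    [("A", True)] * 80 + [("A", False)] * 20
    + [("B", True)] * 40 + [("B", False)] * 60
)


def learn_hire_rates(records):
    """'Train' the model: measure how often each group was hired in the past."""
    hired = Counter()
    seen = Counter()
    for group, was_hired in records:
        seen[group] += 1
        hired[group] += int(was_hired)
    return {group: hired[group] / seen[group] for group in seen}


def recommend(rates, group, threshold=0.5):
    """Recommend a candidate only if their group was usually hired before."""
    return rates[group] >= threshold


rates = learn_hire_rates(HISTORY)
print(rates)  # {'A': 0.8, 'B': 0.4}
print(recommend(rates, "A"), recommend(rates, "B"))  # True False
```

Although candidates from both groups are assumed equally qualified, the model recommends group A and rejects group B, because it faithfully reproduces the pattern in its data rather than judging the candidates themselves.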

Q. What are the consequences of AI providing misleading information? A. The consequences can be widespread and significant. Misleading output can lead to incorrect and potentially dangerous decisions in important areas such as health care, law enforcement, and finance. Misleading AI can also amplify bias, harm people's lives, damage reputations, and erode trust in technology. It may have wider social and ethical implications as well, raising concerns about how AI systems are used and controlled.

Q. Why is context important for AI to provide accurate information? A. Context matters because, unlike humans, AI does not understand the nuances and complexities of real-world situations. Without context, AI may interpret data too literally or fail to connect relevant information, leading to conclusions that are technically correct within narrow limits but wrong or misleading in general. Giving AI strong context allows it to make more informed and accurate assessments, much as humans draw on background knowledge to make decisions.

Q. How can transparency and accountability in AI development prevent "lies"? A. Transparency and accountability are essential in AI development to prevent systems from producing false or misleading results. Transparency involves documenting and communicating the data sources, algorithms, and decision-making processes an AI uses, so that others can inspect and understand how it reached its conclusions. Accountability means that developers and organizations answer for the results of their AI systems and take responsibility for addressing bias, errors, or unethical behavior. By ensuring that AI systems are transparent and that their creators are held accountable, we can build trust and ensure that AI is used responsibly and fairly.
