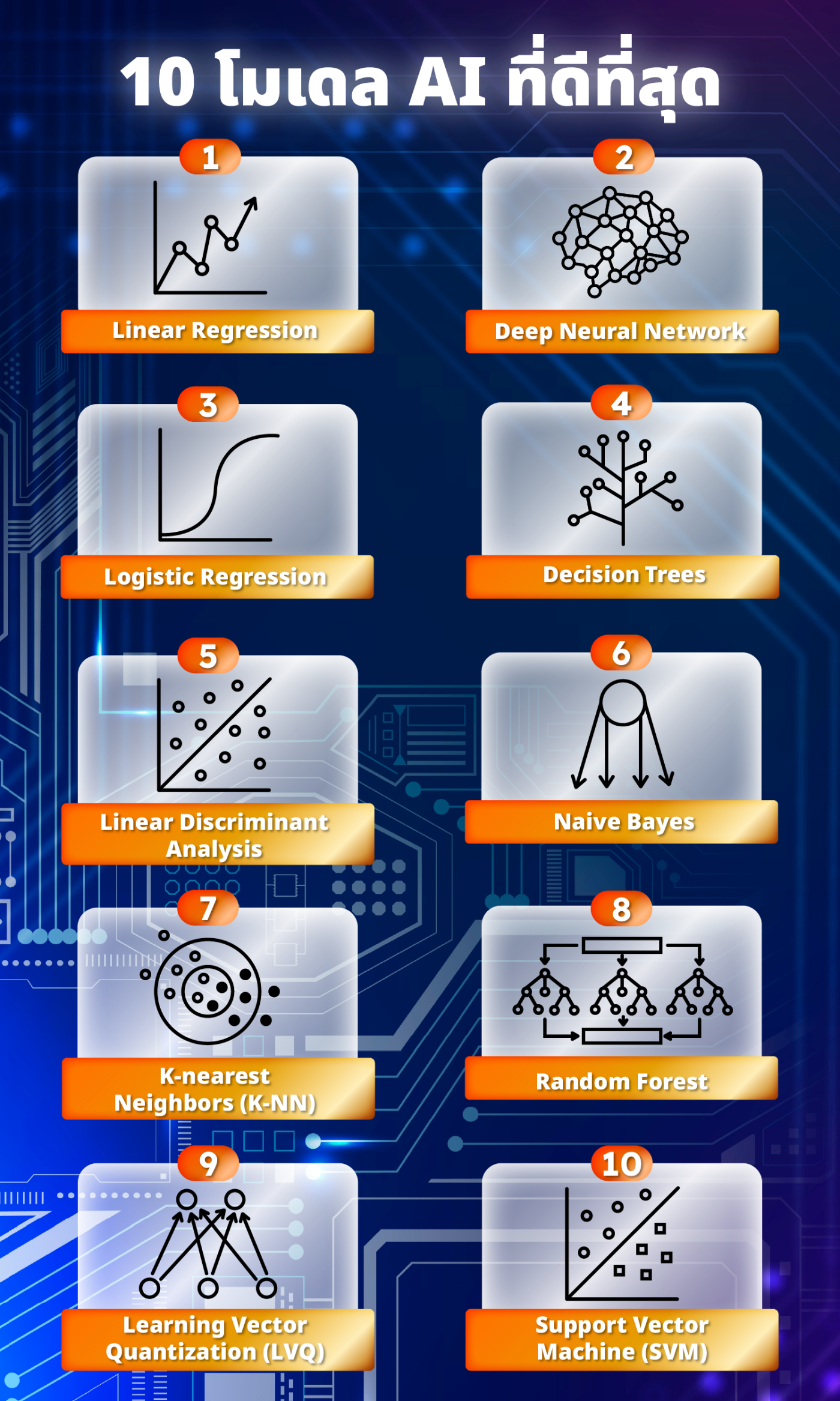

10 best AI models and how they work

2024-03-21 02:39:57

AI models can help analyze various data to study work patterns and make predictions from large amounts of data. For the algorithm to work efficiently, this technology can be modern and take the development of technology to the next level.

Today, many AI models are being developed in various forms. It is widely used to help with complex tasks and data processing. Save a lot of time and reduce costs. AI models help businesses be compatible, profitable and compete effectively By empowering them with clear predictions for a better future. In addition, AI models are a component of software that helps in the system.

The top 10 most popular AI models are:

1. Linear Regression

AI models can create relationships between the independent and dependent variables of a given data to learn through various models. It works in a statistics-based algorithm in which other variables are interconnected and have dependencies. This is widely used in the development of banking, and insurance.

2. Deep Neural Network

It is one of the more interesting AI models inspired by human brain neurons. Deep neural networks have multiple layers of inputs and outputs based on interconnected units called artificial neurons. The main focus of deep neural networks is to support the development of speech recognition, image recognition, and NLP.

3. Logistic Regression

It is a statistical method used for binary classification. It predicts the probability of a binary outcome (e.g. yes/no, win/lose, live/die) based on one or more predictor variables. This is different from linear regression, which predicts continuous outcomes. Logistic regression models the probability of certain classes or events existing, for example, the probability of a team winning a game or a patient having a particular disease.

4. Decision Trees

It is a supervised machine-learning algorithm used for classification and regression tasks. It works by dividing data into smaller parts based on the values of input properties. It breaks down a complex decision-making process into a series of simpler decisions. Therefore, it creates a tree-like structure.

5. Linear Discriminant Analysis

It is a statistical machine-learning technique for classification and dimensionality reduction. It focuses on finding a linear combination of features that best separates two or more classes of objects or events. The basic idea is to project data into a lower dimensional space with a good separation of classes. This avoids over-installation and reduces computational costs.

6. Naive Bayes

Naive Bayes is a simple but powerful probabilistic classifier. It uses Bayes' theorem with the assumption of strong (naïve) independence between features. This is especially true for large data sets. It works surprisingly well on text classification problems like spam filtering and sentiment analysis. Despite its simplicity, Naive Bayes performs better than more complex classification methods.

7. K-nearest Neighbors (K-NN)

K-nearest Neighbors is a classification algorithm. A simple but powerful non-parametric (and regression) model. It works on a very straightforward principle: find 'k' closest data points (neighbors) in the training dataset for a given data point. It determines the most common labels. (in classification) or the average/median (in regression) results of these neighbors to a new point.

8. Random Forest

Random Forest is an ensemble learning technique for classification and regression tasks. It is built on the concept of decision trees. Yet, instead of relying on a single decision tree, Instead, they created many trees during their training time. It exports the mode of the class. (for classification) or average prediction (for regression) of each tree Random Forest introduces randomness in two ways:

-by sampling data points (with transitions) to train each tree (bootstrap or bagging)

-By randomly selecting a subset of features at each intersection point of the tree.

9. Learning Vector Quantization (LVQ)

Learning Vector Quantization (LVQ) is a prototype-based supervised learning algorithm often used for classification tasks. This is done initially by setting up a set of master vectors. and make repeated adjustments To show the distribution of different classes. in better training data During the training process, The algorithm compares training samples with these prototypes. If the training samples are classified incorrectly The closest but incorrect class prototype is removed from the instance. And the prototype of the correct class will be moved closer.

10. Support Vector Machine (SVM)

It is a general-purpose supervised learning algorithm widely used for classification and regression tasks. Find the most appropriate hyperplane or set of hyperplanes in a high-dimensional space that separates classes. It is best obtained by the maximum margin. Simply put, SVM looks for the best decision boundary that is furthest from the nearest data point of any class. That is called the greatest support vector.

Leave a comment :

Recent post

2025-01-10 10:12:01

2024-05-31 03:06:49

2024-05-28 03:09:25

Tagscloud

Other interesting articles

There are many other interesting articles, try selecting them from below.

2024-02-23 05:19:59

2025-03-31 03:30:20

2024-10-10 10:32:48

2025-03-14 10:41:27

2024-05-06 05:14:24

2024-08-13 01:45:34

2024-01-19 05:01:12