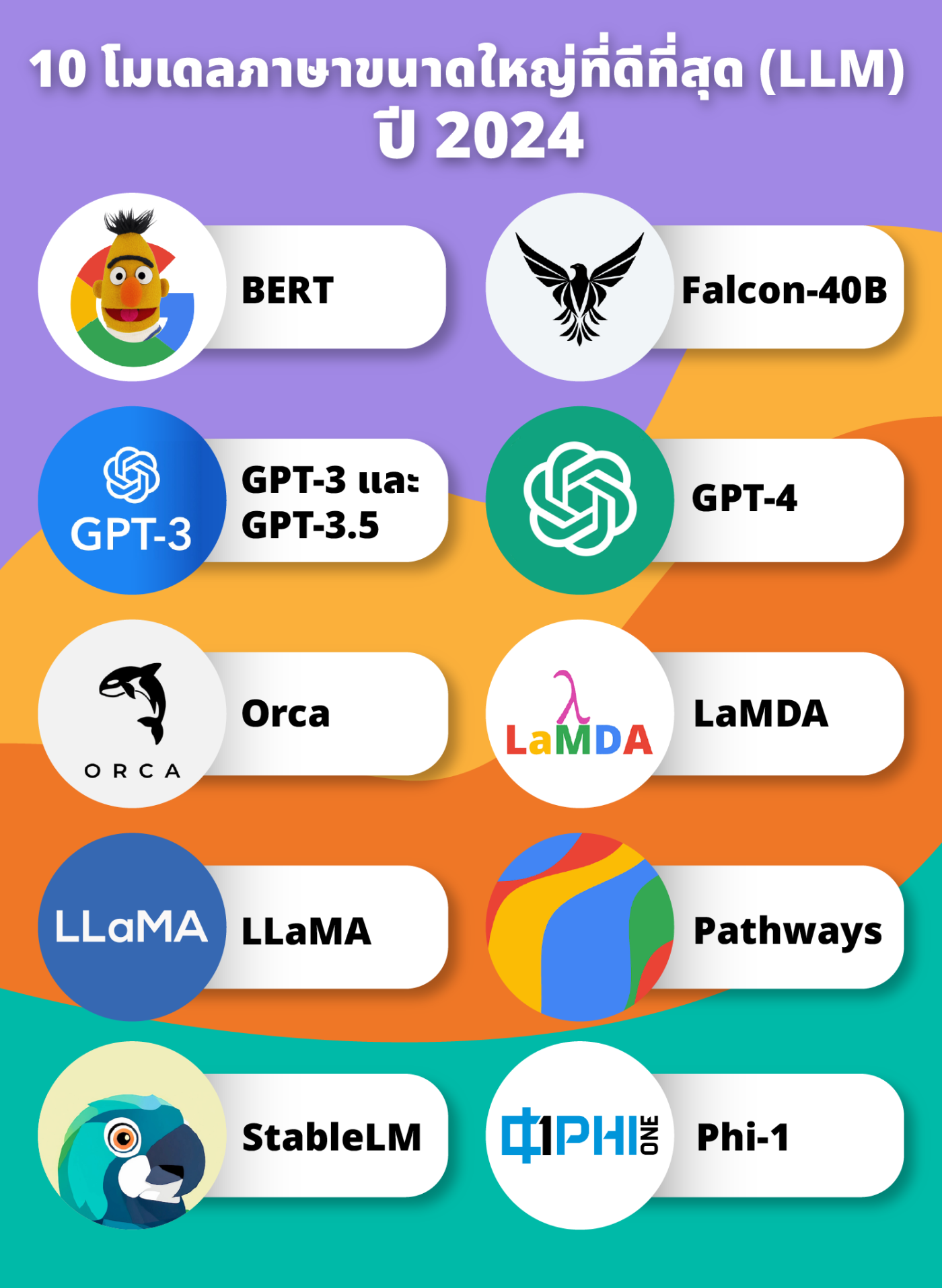

10 Best Large Language Models (LLM) of 2024

2024-03-22 03:10:58

Large language models (LLMs) are deep learning algorithms that can perform a wide range of natural language processing (NLP) tasks. They are based on Transformer models and are trained on massive datasets, which is why they are called "large". This allows them to recognize, translate, predict, or generate text and other content. LLMs are a type of artificial intelligence model.

Below are the best large language models of 2024, along with their pros, cons, and practical applications.

1. BERT

BERT is a language model that Google launched in 2018. It was initially implemented for English in two model sizes, trained on English Wikipedia (2.5 billion words) and the Toronto BookCorpus (800 million words). It is built on the Transformer, a mechanism that learns the contextual relationships between words. A full Transformer has two parts: an encoder that reads the text input and a decoder that produces predictions for a task. BERT uses only the encoder.
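A Transformer learns the "contextual relationships between words" through self-attention. The following minimal pure-Python sketch illustrates scaled dot-product attention, softmax(Q Kᵀ / sqrt(d)) V, on three toy two-dimensional word vectors. It is not BERT's actual implementation, which adds learned projection matrices, multiple attention heads, and many stacked layers. The function names and vectors are hypothetical. No mask is applied, so every word attends to words on both sides, as in BERT's encoder.

```python
import math

def softmax(xs):
    # Subtract the max for numerical stability before exponentiating.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V.

    No mask is used, so each position attends to every other position,
    which is how an encoder such as BERT's sees context on both sides.
    """
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # New representation = attention-weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
    return outputs

# Three toy "word" vectors; using them as Q, K and V makes this self-attention.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in attention(tokens, tokens, tokens):
    print([round(x, 3) for x in row])
```

Each output row is a weighted average of the value vectors. As a result, each word's new representation mixes in information from the words it attends to most strongly.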

Strengths

- High accuracy on natural language processing (NLP) tasks

- Free to use

- Low memory requirements

- Well suited to classification tasks

- Easy to deploy and customize

Weaknesses

- Limited understanding of context

- Cannot handle multiple inputs

- Cannot generate text

- Limited support for non-English languages

- Fine-tuning can take a long time

Usage

- Text summarization

- Extracting valuable information from biomedical literature for research

- Finding high-quality relationships between medical concepts

2. Falcon-40B

Falcon-40B is an open-source LLM with 40 billion parameters, available under the Apache 2.0 license. It also comes in smaller variants: Falcon-1B (one billion parameters) and Falcon-7B (seven billion parameters). Falcon-40B is trained on a general language modeling task, which means it works by predicting the next word. Its architecture is similar to that of the GPT-3 language model.
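"Predicting the next word" means the model repeatedly scores possible continuations, appends one, and feeds its own output back in as context. This is called autoregressive generation. The following sketch illustrates that loop using greedy decoding over a small, hypothetical table of next-word probabilities. Falcon-40B itself computes these probabilities with a Transformer over subword tokens, and real deployments often sample rather than always taking the top choice.

```python
# Hypothetical next-word probabilities; a real LLM computes these with a
# Transformer over subword tokens rather than looking them up in a table.
NEXT_WORD_PROBS = {
    "<s>": {"the": 0.6, "a": 0.4},
    "the": {"model": 0.5, "cat": 0.3, "end": 0.2},
    "a": {"model": 0.7, "cat": 0.3},
    "model": {"predicts": 0.8, "</s>": 0.2},
    "predicts": {"words": 0.9, "</s>": 0.1},
    "words": {"</s>": 1.0},
    "cat": {"</s>": 1.0},
    "end": {"</s>": 1.0},
}

def generate_greedy(start="<s>", max_words=10):
    """Autoregressive generation: repeatedly pick the most likely next word
    and feed it back in as the new context."""
    words = []
    current = start
    for _ in range(max_words):
        candidates = NEXT_WORD_PROBS.get(current)
        if not candidates:
            break
        # Greedy decoding: take the highest-probability continuation.
        current = max(candidates, key=candidates.get)
        if current == "</s>":  # end-of-sequence marker
            break
        words.append(current)
    return " ".join(words)

print(generate_greedy())
```

`generate_greedy()` follows the highest-probability path through the table and prints `the model predicts words`.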

Strengths

- Open for commercial and research use

- Its training data is curated and processed from a variety of online sources using custom pipelines, so the model has access to a wide range of relevant information

- Lower compute requirements

- More conversational than the GPT series, which makes for a more interactive and engaging experience

Weaknesses

- Fewer parameters than the GPT series

- Supports only English, Spanish, German, French, Italian, Polish, Portuguese, Czech, Dutch, Romanian, and Swedish

Usage

- Analysis of medical literature

- Analysis of patient records

- Sentiment analysis for marketing

- Translation

- Chatbots

- Game development and creative writing

3. GPT-3 and GPT-3.5

Launched by OpenAI in 2020, GPT-3 was trained on billions of words to learn human language. Like the other LLMs on this list, it is a generative AI model that produces language on its own and interacts with users.

Strengths

- Boosts creativity and productivity

- The basic version is free

- Cost-effective if your workload is light

- Requires minimal infrastructure

- Requires minimal employee training

Weaknesses

- Expensive at high usage

- May reveal sensitive information

- No continuous training: its training data ends in 2021

- Hallucinations (false information that deviates from contextual logic or external facts)

Usage

Companies can use GPT-3 and GPT-3.5 for the following applications:

- Customer service

- Chatbots

- Content creation, including blog posts, long-form content, YouTube video scripts, advertising and marketing copy, and product descriptions

- Virtual assistants

- Translation

- Coding

- Summarization

- Generating risk ratings

- Game design and creative writing

4. GPT-4

GPT-4 is the largest model in OpenAI's GPT series. Unlike its predecessors, it can accept images as well as text as input. It also supports a system message that lets the user specify the task and tone. As of August 2023, GPT-4 is available through ChatGPT Plus and powers Microsoft Bing search. It is expected to be integrated into Microsoft Office products.
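In chat-style APIs, the system message is usually sent as the first entry in a list of role/content messages. OpenAI's Chat Completions API uses the `system`, `user`, and `assistant` roles this way. The following sketch only builds such a list and does not call any API. The helper `build_messages` and the example task and tone are hypothetical.

```python
def build_messages(task, tone, user_prompt):
    """Build a chat-style message list in which a system message sets the
    task and tone before the user's request (hypothetical helper)."""
    system_text = f"You are an assistant that helps with {task}. Respond in a {tone} tone."
    return [
        {"role": "system", "content": system_text},
        {"role": "user", "content": user_prompt},
    ]

messages = build_messages(
    task="writing product descriptions",
    tone="friendly, concise",
    user_prompt="Describe a stainless steel water bottle.",
)
for m in messages:
    print(m["role"], "->", m["content"])
```

Keeping the task and tone in the system message separates them from the user's request. This lets the same instructions apply across many user prompts.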

Strengths

- Understands both text and images

- Improved creativity, including the ability to write compelling and coherent stories

- Cost-effective and scalable

- Can be personalized

Weaknesses

- Can produce completely fabricated content

- Cannot generate entirely new ideas or make predictions

- Generating high-quality code requires a lot of training data, which can be difficult for small software companies

5. Orca

Orca was created by Microsoft and has 13 billion parameters. It builds on ChatGPT's capabilities and matches GPT-3.5 on most tasks. Orca is much smaller than GPT-4. It uses ChatGPT as a teacher's assistant and learns progressively from GPT-4 to imitate human reasoning.

Strengths

- More powerful than other open-source models

- Its progressive learning approach helps Orca build on its knowledge step by step

- Can run on a laptop

- Helps teams learn complex concepts such as legal reasoning and financial planning

Weaknesses

- Training is still in progress

- Requires a large amount of processing resources

- Running Orca on a CPU is slower than on a GPU-accelerated setup

Usage

- Writing scripts, content, and code

- Brainstorming ideas for projects

- Helping computers reason about complex topics such as law, financial planning, and medical diagnosis

6. LaMDA

LaMDA (Language Model for Dialogue Applications) is a family of LLMs created by Google Brain. It grew out of Meena, which Google introduced in 2020. Bard, Google's experimental AI chat service, was initially based on LaMDA. Like BERT, LaMDA is built on the Transformer, but it uses a decoder-only language model. It was pre-trained on a text corpus of conversations and documents containing 1.56 trillion words.
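A decoder-only model generates text left to right. During attention, each word may therefore look only at itself and the words before it. A causal mask enforces this. The following minimal pure-Python sketch builds such a mask and shows that masked future positions receive zero attention weight. The helper names are hypothetical, and this is not LaMDA's code. By contrast, BERT's encoder applies no such mask.

```python
import math

def causal_mask(n):
    """Return an n x n mask where position i may attend only to positions 0..i.
    Allowed entries are 0.0; blocked (future) entries are -inf."""
    return [[0.0 if j <= i else float("-inf") for j in range(n)] for i in range(n)]

def masked_softmax(scores, mask_row):
    # Adding -inf to a score makes exp(...) equal 0, so future words get zero weight.
    masked = [s + m for s, m in zip(scores, mask_row)]
    top = max(masked)
    exps = [math.exp(x - top) for x in masked]
    total = sum(exps)
    return [e / total for e in exps]

mask = causal_mask(3)
# With equal raw scores, position 1 can attend only to positions 0 and 1.
print(masked_softmax([1.0, 1.0, 1.0], mask[1]))  # [0.5, 0.5, 0.0]
```

Position 1 splits its attention evenly between positions 0 and 1 and gives position 2, a future word, zero weight.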

Strengths

- Can hold realistic conversations with users because it was trained on dialogue

- Can continuously retrieve data from the internet

- Specialized in conversation

Weaknesses

- Few details about LaMDA are publicly available

- Only approved teams and individuals can test it

Usage

Organizations can use LaMDA for customer support chatbots, research, brainstorming, and organizing information.

7. LLaMA

LLaMA (Large Language Model Meta AI) is a language model developed by Meta and is currently a large open-source language model. It was initially released only to approved developers and researchers. LLaMA models come in various sizes, including smaller versions that need less processing power. The largest version has 65 billion parameters.

Strengths

- Many sizes to choose from

- More effective than other LLMs

- Uses fewer resources than other models

Weaknesses

- Limited customization for developers

- Non-commercial license only, which means you cannot use it for commercial applications such as marketing or software development

Usage

Companies often use LLaMA to build chatbots, summarize text, and create content. It can also be used for research, especially when researchers need to test and train LLMs quickly and efficiently.

8. PaLM (Pathways Language Model)

PaLM is a 540-billion-parameter Transformer language model created by Google AI. Smaller versions with 8 billion and 62 billion parameters are also available. Like the other popular large language models on this list, PaLM works by learning to represent the relationships between words and phrases in sentences.
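These parameter counts translate directly into hardware needs. A common back-of-the-envelope estimate of the memory needed to store a model's weights is parameters × bytes per parameter. The following sketch assumes 16-bit (2-byte) weights. It ignores activations, caches, and any training state, so real requirements are higher. The function name is hypothetical.

```python
def weight_memory_gb(num_params, bytes_per_param=2):
    """Approximate memory (in decimal gigabytes, 1 GB = 10**9 bytes) needed
    just to hold a model's weights. Assumes 2 bytes/parameter (16-bit) by default."""
    return num_params * bytes_per_param / 10**9

# PaLM sizes mentioned above, assuming 16-bit weights.
for name, params in [("PaLM 8B", 8e9), ("PaLM 62B", 62e9), ("PaLM 540B", 540e9)]:
    print(f"{name}: ~{weight_memory_gb(params):,.0f} GB")
```

Under that assumption, the 540-billion-parameter model needs about 1,080 GB for its weights alone, compared with about 16 GB for the 8-billion-parameter version. This is why the smaller sizes matter in practice.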

Strengths

- Available in smaller sizes

- Supports more than 100 languages

- Strong code generation and reasoning abilities

- Seamless integration with the Google ecosystem

- Controllable outputs: users have more control over the tone, style, and intended result of the generated text

Weaknesses

- Less environmentally sustainable than other models

- Large size

- Not the best choice for people and companies that prioritize efficiency

Usage

Companies can use PaLM for coding, complex problem-solving, computation, and translation.

9. StableLM

StableLM is an open-source model designed for a variety of natural language processing tasks, and anyone can use and modify it. Developers can download it from GitHub in sizes of three to seven billion parameters. It draws on five state-of-the-art open-source datasets built for conversational LLMs: Dolly, HH, Alpaca, GPT4All, and ShareGPT. As a result, StableLM outperforms its competitors in long-running conversations.

Strengths

- Open source

- Highly customizable

- Effective

-Include text as a picture

- Offers a public beta demo and full model downloads for use on-premises or in the cloud

Weaknesses

- Not good at creative writing

- May be biased because it was trained on experimental datasets

- Requires technical expertise to set up

Usage

Companies often use StableLM to generate text and brainstorm ideas for projects, from stand-up comedy to YouTube tutorials, as well as for coding and organizing tasks.

10. Phi-1

Phi-1 is one of Microsoft's latest models. With just 1.3 billion parameters, this lightweight language model outperforms GPT-3.5 on coding benchmarks. It does so by training on high-quality data sources such as StackOverflow and The Stack. Like BERT and the other models on this list, Phi-1 is a Transformer-based language model.

Strengths

- High-quality information and responses

- Requires fewer processing resources because it has fewer parameters

- More responsive and engaging than GPT-4

Weaknesses

- It is a new LLM and still under development

- Lacks the domain-specific knowledge of larger LLMs, such as coding with a specific API

- Because it has fewer parameters, Phi-1 does not always handle errors or stylistic variations in prompts

Usage

Companies can use Phi-1 for chatbots, translation, summarization, and coding.
