How Stable Cascade Works

2024-03-25 01:42:28

After Stability, Stable Cascade was launched in a research preview. This innovative text-to-image model offers an interesting three-step approach. It sets new standards for quality, flexibility, and fine-tuning. and efficiency It focuses on removing additional hardware barriers.

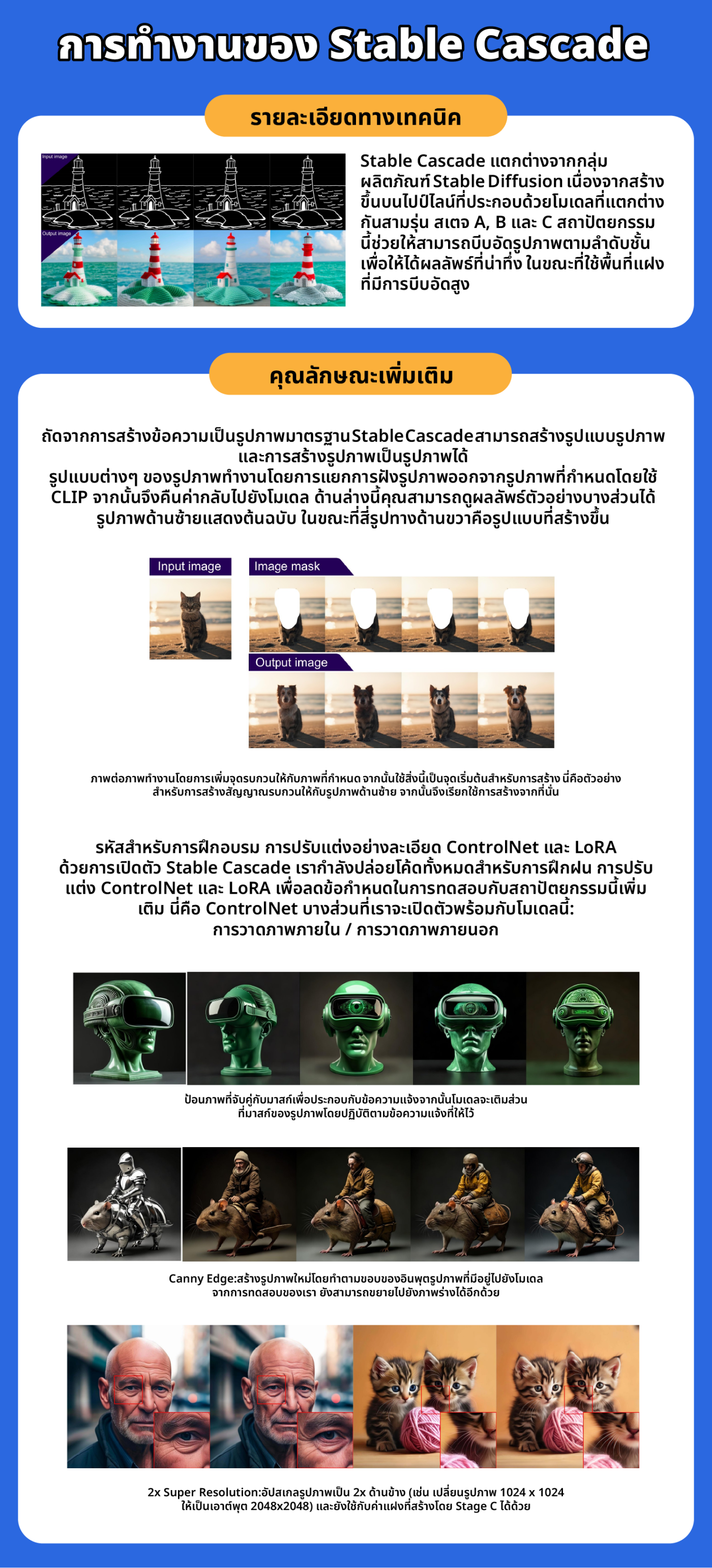

Technical details

Stable Cascade differs from the Stable Diffusion product family because it is built on a pipeline consisting of three different models, Stages A, B, and C. This architecture allows for hierarchical image compression. To get amazing results while using a highly compressed latency space. Let's look at each step to understand how it all comes together:

The latent generator phase, phase C, converts the user input into a compact 24x24 latency that is passed to the latent decoder phase (phases A and B), which is used to compress the image. It is similar to the work of VAE instability. diffusion but achieves much higher compression.

By separating the conditional text generation (stage C) from decoding into high-resolution pixel space (stages A and B), we can allow additional training or refinement, including ControlNets and LoRA, to be completed. Stand-alone model on stage C. This achieves a 16x cost reduction compared to training a Stable Diffusion model of similar size. (As shown in the original report ) Stages A and B can be customized for additional control. However, it is comparable to the VAE tuning in the Stable Diffusion model for most applications. It will provide minimal additional benefit. We recommend practicing Stage C and using Stages A and B in their original state.

Stages C & B will be released with two different models: Parameters 1B & 3.6B for Stage C and Parameters 700M & 1.5B for Stage B. It is recommended to use the 3.6B version for Stage C as it has quality output. Maximum. However, the 1B parameter version can be used for those who want to focus on the most minimal hardware requirements. For step B, both achieve excellent results. However, 1.5 billion chunks are better at reconstructing small details. Thanks to Stable Cascade's modular approach, the expected VRAM requirements for inference can be kept at approx. 20GB, but can be reduced further by using a smaller version (As mentioned earlier This may reduce the quality of the final output.)

Additional features

-Next to standard text-to-image generation, Stable Cascade can create image formats and image-to-image generation.

Image variations work by extracting image embeddings from a given image using CLIP and then returning them to the model. Below you can see some sample results. The picture on the left shows the original. while the four images on the right are the generated patterns.

-Picture Collage works by adding noise to a given image. Then use this as a starting point for building. Here is an example of creating noise in the image on the left. Then run the build from there.

-Code for training Fine-tuning ControlNet and LoRA

With the release of Stable Cascade, we are releasing all code for training, tuning ControlNet, and LoRA to further reduce testing requirements with this architecture. Here are some of the ControlNets we will be releasing with this model:

Inner Drawing / External Drawing: Enter an image paired with a mask to accompany the prompt. The model then fills in the masked portion of the image following the prompts provided.

Details about these can be found on the Stability GitHub page, including training code and inference.

Although this model is not currently commercially available, what if you want to try out other image models? ours for commercial use Please visit the Stability AI Membership page for self-hosted commercial use. or our developer platform to access our API.

Leave a comment :

Recent post

2025-01-10 10:12:01

2024-05-31 03:06:49

2024-05-28 03:09:25

Tagscloud

Other interesting articles

There are many other interesting articles, try selecting them from below.

2024-04-19 03:03:34

2024-04-03 05:56:16

2025-02-18 10:31:34

2024-01-19 04:47:28

2023-10-18 04:33:37

2024-09-27 09:15:29

2024-04-26 03:25:28

2025-05-06 07:12:00